Google introduces an AI game-changer with Gemini

Last year, OpenAI, with their groundbreaking ChatGPT, shook up the world of AI. Meanwhile, Google, known as one of the pioneers in AI technology, remained somewhat out of the spotlight. But with the unveiling of game-changer Gemini, they seem poised to take a giant leap forward!

The game is on!

This week, Google introduced Gemini. According to Google, Gemini is a "multimodal AI model" designed from the start to process and understand information from multiple sources, including text, images, video, audio and code, rather than a language model such as GPT-4 that began with text and gained other input types later.

The introduction looks very impressive and promises applications we haven't seen before with ChatGPT or other AI tools. Although the video gives the impression of a real-time demo of how Gemini works, it turned out soon after the introduction that this is not quite the case. The video was edited and there were no real spoken prompts. Google states that "all user prompts and outputs in the video are real but shortened to keep the video concise" (read: among other things, the extra prompts needed for this output were omitted). "The video illustrates what multimodal user experiences built with Gemini might look like. We created it to inspire developers."

Watch this 6-minute introductory video to get a first impression.

What can Gemini be used for?

Its multimodality makes Gemini a powerful tool for a wide range of applications, including:

- Natural language processing (NLP): Gemini can understand and process text, and can be used for tasks such as translations, summaries and answering questions.

- Computer vision (CV): Gemini can understand and process images and videos, and can be used for tasks such as object recognition, face recognition and pattern recognition.

- Audio processing (AP): Gemini can understand and process audio, and can be used for tasks such as speech recognition, music analysis and sound synthesis.

- Code understanding (CU): Gemini can understand and process code, and can be used for tasks such as code analysis, code generation and code assistance.

How does Gemini perform relative to other AI models such as GPT-4?

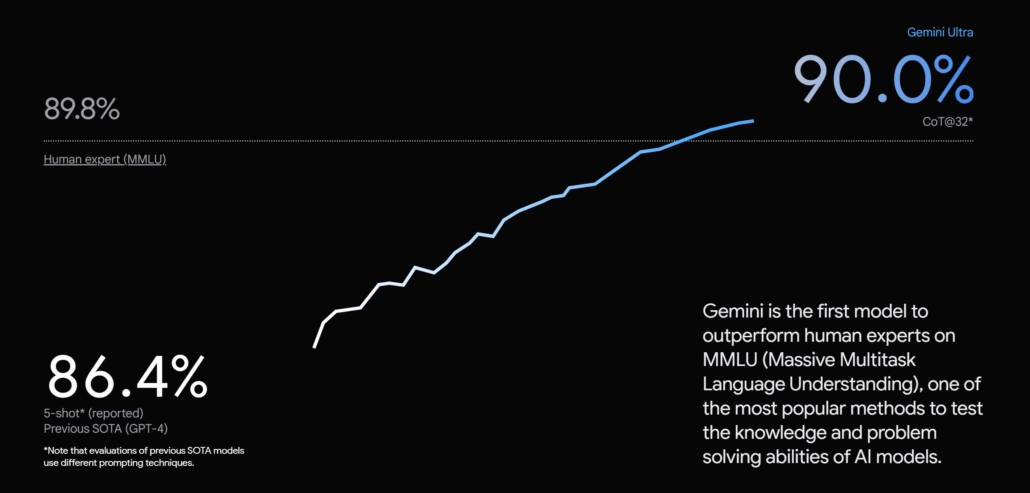

According to Google, Gemini is one of the largest and most advanced AI models in the world and significantly outperforms other AI models, including GPT-4.

For example, in a natural language processing (NLP) test, Gemini scored 20% better than GPT-4, and in a computer vision (CV) test, Gemini scored 15% better than GPT-4.

With a score of 90.0%, Google also says Gemini Ultra is the first model to outperform human experts on MMLU (massive multitask language understanding), which uses a combination of 57 subjects such as math, physics, history, law, medicine and ethics to test both world knowledge and problem-solving skills.

Key differences between Gemini and GPT-4 (according to Google)

- Multimodality: Gemini is a multimodal AI model, meaning it is capable of processing and understanding information from different sources. For example, it can translate text while taking into account the context of the associated image, identify objects in an image while taking into account the text written about them, and write code that performs a particular task based on accompanying audio instructions. GPT-4 is primarily a text-focused language model; its image input was made available later as a separate capability.

- Size and complexity: Google presents Gemini as its largest and most capable AI model. Neither Google nor OpenAI has published parameter counts for Gemini or GPT-4, so figures in circulation, such as 1.56 trillion parameters for Gemini, are unconfirmed. The 1.5 billion parameters sometimes attributed to GPT-4 is actually the size of the much older GPT-2.

- Different versions: Gemini is available in three versions (Ultra, Pro and Nano), with different capacities and capabilities. GPT-4 is currently only available in one version.

Gemini will first be integrated with the chatbot Bard, followed by other Google products such as Pixel 8 Pro, Search, Ads, Chrome and Duet AI. The number of applications and the amount of data Google has access to (think also of Gmail, YouTube, Nest cameras and Google Maps) give it huge potential and a unique position compared to other parties such as OpenAI.

It will first be rolled out in 170 countries outside Europe, so we will have to be patient until we can start using it in the Netherlands as well.

A side note: Gemini's Ultra model is the one compared to GPT-4, and where it scores better, the difference is only a few percentage points. Exactly how Google's top model will perform in practice is still uncertain, and it is not expected to be rolled out until early 2024, while GPT-4 has been available since March 2023. OpenAI is reportedly already working on GPT-5 behind the scenes, so it will be interesting to see what its applications will be and when it is rolled out.

See more about the Gemini launch here.
